Every Executive's AI Dilemma: How to Move Fast Without Getting Burned

AI is powerful and risky. While your competitors are rushing headfirst into AI and hoping for the best, smart leaders are using a proven risk assessment tool to pick the right opportunities.

Every executive I meet asks the same question: 'How do we get started with AI without getting burned?' They know AI isn't optional—it's already reshaping their industries. They know that AI will increasingly become part of how we work and live and in the long run will drive fundamental change at the personal, organizational, and societal level. But they also know the headlines: discrimination lawsuits, regulatory fines, catastrophic AI failures. The pressure to act is enormous, but so are the stakes.

We do not know how it will all end. The technology is evolving so fast that it is hard to keep up. Some CEOs are advocating for an ‘AI First’ approach, prioritizing the use of AI in all aspects of an organization.

As a leader, you're caught between two imperatives: move fast enough to stay competitive, but move smart enough to avoid becoming a cautionary tale. Your board is asking questions. Your competitors are making moves. Your teams are eager to experiment. But without a systematic approach to AI risk, you're essentially flying blind.

Why Every Executive Should Care About AI Risk

AI is a transformational technology, and transformational technologies are risky. Depending on the application, the risks could be legal, regulatory, operational, cyber, reputational, or ethical. AI systems, especially in high-stakes or regulated environments, can fail in strange, invisible, or catastrophic ways. Failure Mode and Effect Analysis (FMEA), a risk assessment method described later in this post, helps make those risks visible before they cause harm. It is a structured way to think through everything that could go wrong, and with AI a lot of things could go wrong.

As a leader, you need to understand, mitigate, and manage those risks to avoid costly mistakes, reputational damage and legal exposure. Here are just a few:

Business-Critical Risks: Bad decisions at scale, skill erosion, competitive disadvantage

Legal & Regulatory Risks: Discrimination claims, copyright violations, regulatory uncertainty

Technical & Security Risks: Bad data, hallucinations, cyber vulnerabilities

So there is a lot of risk. That is why boards are starting to get involved and AI projects often include a risk management work stream.

But I think every leader could benefit from developing a better understanding of the risks involved with AI. The solution isn't to avoid AI—it's to approach it systematically. So how do you do that?

There is a tool for that

In recent blog posts, we looked at how to use two familiar tools - process mapping and RACI charts - as starting points for reimagining work instead of end points for documentation. In this blog post, we will look at another tool that can do more than just meet documentation requirements. It is called Failure Mode and Effect Analysis (FMEA). FMEA is a structured method for identifying potential failures in a system, process, or product before they happen.

FMEA was first developed in the U.S. military in the late 1940s, but the framework really took off in the 1960s when NASA embraced it. By the 1980s and 1990s, FMEA had spread to the automotive and manufacturing industries, and today it is a staple in most quality departments.

At its core, FMEA asks three key questions:

What could go wrong? (Failure Mode)

Why would it go wrong? (Cause)

What would happen if it did? (Effect)

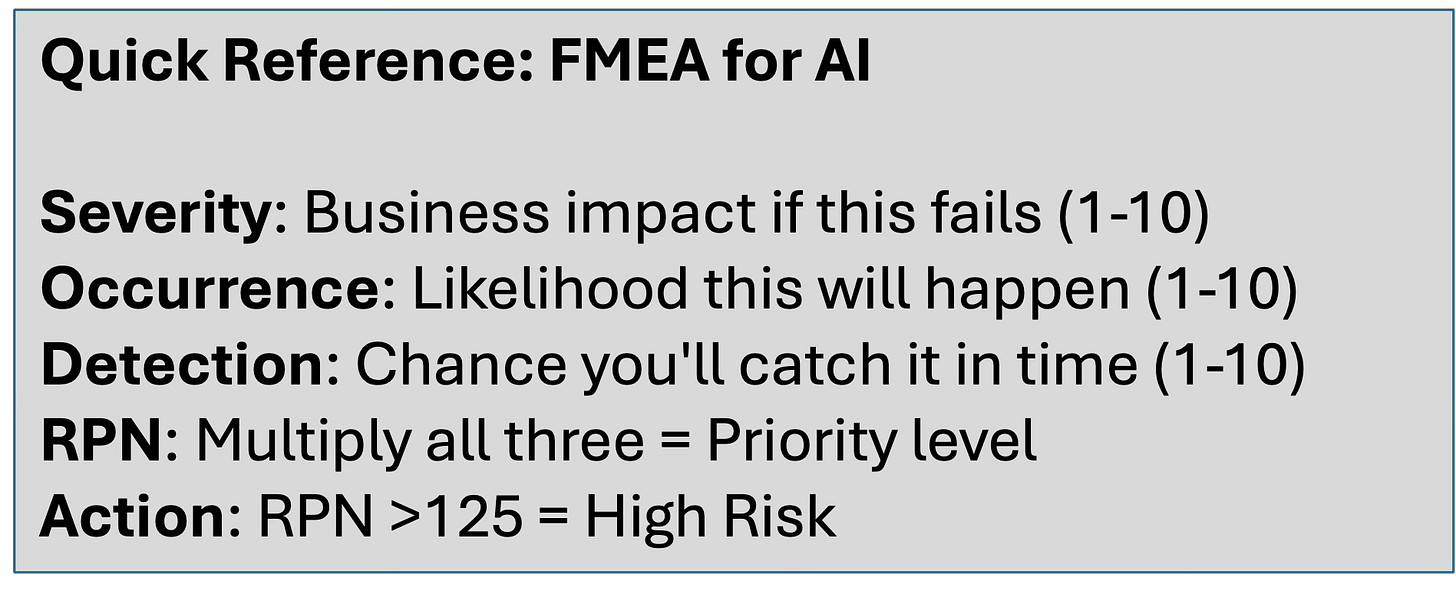

Each potential failure mode is then evaluated based on:

Severity: How bad would it be?

Occurrence: How likely is it to happen?

Detection: How likely are we to miss it? (The harder a failure is to detect, the higher the rating.)

Each of the three factors is rated on a numeric scale (1 to 10 in the example that follows), and the ratings are multiplied together to produce a Risk Priority Number (RPN). The higher the RPN, the more urgent it is to take action.

Say, for example, you want to evaluate the potential risks of an AI-enabled applicant tracking system. One failure mode could be that the system rejects qualified candidates. You are looking at two potential causes:

The application does not match key words in the job description

The application is rejected because the algorithm is biased against older candidates

Both lead to rejections, but rejecting qualified candidates because of age bias could lead to legal challenges (effect), which would be rated much higher in terms of severity (10) than poor key word matching (1).

If the bias is strong, occurrence will likely be rated high (8) compared to key word matching, which is job specific and probably moderate (6).

Let’s assume for both scenarios that the model you use does not have built-in controls that would allow you to detect the issue, so detection is rated 10 in both cases.

For the age bias scenario, the RPN is 800 (10 x 8 x 10), whereas for the key word matching scenario the RPN is 60 (1 x 6 x 10). That gap would prompt you to focus on the more significant risk and think about ways to mitigate it.
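The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration, not part of any standard FMEA tooling: the function name `risk_priority_number` and the check that each rating falls between 1 and 10 are assumptions made here, while the ratings themselves come from the applicant tracking example.

```python
def risk_priority_number(severity: int, occurrence: int, detection: int) -> int:
    """Multiply the three FMEA ratings into a Risk Priority Number (RPN)."""
    ratings = {"severity": severity, "occurrence": occurrence, "detection": detection}
    for name, rating in ratings.items():
        # Assumption: every rating is on a 1-10 scale, where 10 is the worst case.
        if not 1 <= rating <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {rating}")
    return severity * occurrence * detection


# Ratings taken from the applicant tracking example in the text.
age_bias_rpn = risk_priority_number(severity=10, occurrence=8, detection=10)
keyword_rpn = risk_priority_number(severity=1, occurrence=6, detection=10)

print(age_bias_rpn)  # 800
print(keyword_rpn)   # 60
```

Because the three ratings are multiplied, a single very high rating does not automatically produce a high RPN. That is one reason many teams also review any failure mode with a severity of 9 or 10 regardless of its overall score.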

How to Use FMEA to Find Your Best AI Opportunities

As the example shows, FMEA can be used to identify and evaluate risks for specific AI applications - and as mentioned earlier, corporate technology teams and vendors tend to have frameworks in place for doing just that.

But there is another application: using FMEA to zero in on low-risk use cases.

Say you are the head of HR for a mid-size company and your CEO just committed the company to an “AI first” approach. You know that you will be expected to come up with use cases for your department. You also know that obtaining funding for AI will be easier than making the case for additional headcount. You are eager to take advantage of the opportunity, but you are also worried about the risks and potential consequences, which can be severe. Nobody wants to end up on the front page of the Wall Street Journal because their AI system unlawfully discriminated.

Analyzing the various use cases with the FMEA framework will show you which ones carry low or moderate risk:

Start with making a list of all the possible use cases for AI in your function.

For each use case, identify potential failure modes, underlying causes, and potential effects.

Then, evaluate each failure mode based on severity, occurrence, and detection and calculate the risk priority numbers.

The result will be a comprehensive view of the risks involved with each use case. Armed with this view, you can now make an informed decision about which use cases to pursue.

Say you looked at three use cases:

Screening applications

Handling employee questions about benefits

Recommending training courses

You might find that screening applications is quite risky (especially when using a black box model). Handling employee questions carries some risks, but those could be mitigated by forcing the system to reference company policies. Recommending training courses seems low risk: the worst thing that could happen is frustrated employees.
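The steps and the three use cases above can be sketched as a small ranking script. Treat everything here as a hypothetical illustration: the `FailureMode` class, the `rank_use_cases` helper, and the choice to rank each use case by its highest RPN are assumptions made for this sketch. Only the two screening ratings come from the applicant tracking example; the ratings for the other two use cases are made-up placeholders that your own team's assessment would replace.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    severity: int    # 1-10, 10 = most severe
    occurrence: int  # 1-10, 10 = most likely
    detection: int   # 1-10, 10 = hardest to detect

    @property
    def rpn(self) -> int:
        # Risk Priority Number: the product of the three ratings.
        return self.severity * self.occurrence * self.detection


def rank_use_cases(use_cases: dict[str, list[FailureMode]]) -> list[tuple[str, int]]:
    """Return (use case, highest RPN) pairs, lowest-risk use case first."""
    worst = {name: max(fm.rpn for fm in modes) for name, modes in use_cases.items()}
    return sorted(worst.items(), key=lambda item: item[1])


use_cases = {
    "Screening applications": [
        # Ratings from the applicant tracking example earlier in the post.
        FailureMode("Rejects qualified candidates due to age bias", 10, 8, 10),
        FailureMode("Rejects qualified candidates due to key word mismatch", 1, 6, 10),
    ],
    "Handling employee questions about benefits": [
        # Hypothetical placeholder ratings, for illustration only.
        FailureMode("Gives an answer that contradicts company policy", 6, 4, 5),
    ],
    "Recommending training courses": [
        # Hypothetical placeholder ratings, for illustration only.
        FailureMode("Recommends irrelevant courses", 2, 5, 3),
    ],
}

ranking = rank_use_cases(use_cases)
for name, rpn in ranking:
    print(f"{name}: highest RPN {rpn}")
```

With these placeholder numbers, the script lists training recommendations first (30), benefits questions second (120), and application screening last (800). Ranking by the single worst failure mode is a deliberately conservative choice: one severe, hard-to-detect failure is enough to make a use case risky, even if its other failure modes are minor.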

Just because a use case carries a lot of risk does not mean you should not pursue it. The FMEA reveals the risks and enables leaders to make informed decisions according to their appetite for risk.

The Bottom Line

The companies that will dominate the AI era aren't necessarily the ones with the biggest AI budgets or the most ambitious projects. They're the ones that can deploy AI at scale without catastrophic failures—because they thought through the risks first.

Your competitors are likely moving fast and hoping for the best. You can move fast and smart. That's your competitive advantage.

Start today. Identify potential AI use cases. Run them through the FMEA framework. Make the invisible risks visible. Then decide where to invest and move forward with confidence and clarity.

AI offers tremendous opportunities, but only for organizations that can manage the risks thoughtfully. The FMEA framework can help leaders think through the risks involved with AI in a systematic and thoughtful way - and make smart choices about where to invest. The leaders who will succeed with AI aren't necessarily the ones who move fastest. They're the ones who move smartly, with clear eyes about both the potential and the pitfalls.

Our Level Up framework provides a structured approach to help you and your team identify opportunities, build capabilities, and implement changes that make work more productive, valuable, and motivating. From lightweight guidance to comprehensive transformation support, we offer flexible solutions that meet you and your team where you are. To learn more about how we can help your team reach the next level of performance, join our upcoming webinar.
